Anyone who’s had to configure the TLS/SSL settings for their VMware infrastructure will probably have come across William Lam’s post on the subject. It provides a much-needed script for disabling the weaker protocols on ports 443 (rhttpproxy) and 5989 (sfcb), but it leaves out the HA agent on port 8182 and doesn’t alter the ciphers. We also need to remove the TLS_RSA ciphers to clear ROBOT (Return Of Bleichenbacher’s Oracle Threat) warnings.

The vSphere TLS Reconfigurator utility does fix the TLS protocols for port 8182 (HA communications), but it can only be used when the ESXi host is on the same minor version as vCenter, and none of its options change the ciphers in use. This was a useful posting I came across for amending the cipher list.

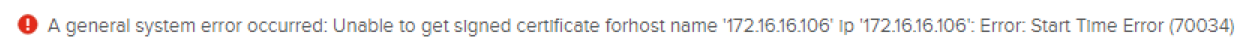

I did try the Advanced Setting UserVars.ESXiVPsAllowedCiphers (new in ESXi 6.5), but it appears not to be implemented yet: rhttpproxy certainly ignores the setting when it starts. I have raised an SR with VMware to investigate this.

So I thought it would be useful to list the ports that tend to crop up in vulnerability scans and what is required to fix each one, in case you need to configure something beyond what the usual utilities and scripts can handle, such as standalone hosts.

I have only tried these on recent ESXi 6.0U3 and 6.5U1 builds.

TCP/443 – VMware HTTP Reverse Proxy and Host Daemon

Set Advanced Settings:

UserVars.ESXiVPsDisabledProtocols to “sslv3,tlsv1,tlsv1.1”

On ESXi 6.0, the following two are also needed:

UserVars.ESXiRhttpproxyDisabledProtocols to “sslv3,tlsv1,tlsv1.1”

UserVars.VMAuthdDisabledProtocols to “sslv3,tlsv1,tlsv1.1”
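
If you prefer the ESXi Shell to the Host Client, the same settings can be applied with esxcli. The helper below is a hypothetical sketch (run off-host, it only prints commands) that builds them for a given ESXi version. It assumes advanced settings are addressed as /UserVars/SettingName paths by esxcli system settings advanced set, with -s supplying a string value.

```python
# Hypothetical helper: print the esxcli commands for the TCP/443 protocol settings.
# Assumption: commands are pasted into the ESXi Shell (or SSH) on each host.

DISABLED = "sslv3,tlsv1,tlsv1.1"


def protocol_commands(esxi_version: str) -> list[str]:
    """Return esxcli commands that set the disabled-protocol advanced settings."""
    options = ["UserVars.ESXiVPsDisabledProtocols"]
    if esxi_version == "6.0":
        # ESXi 6.0 also needs the rhttpproxy and vmauthd settings.
        options += [
            "UserVars.ESXiRhttpproxyDisabledProtocols",
            "UserVars.VMAuthdDisabledProtocols",
        ]
    commands = []
    for option in options:
        # esxcli uses a path form, e.g. /UserVars/ESXiVPsDisabledProtocols
        path = "/" + option.replace(".", "/")
        commands.append(f'esxcli system settings advanced set -o {path} -s "{DISABLED}"')
    return commands


if __name__ == "__main__":
    for cmd in protocol_commands("6.0"):
        print(cmd)
```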

To remove the TLS_RSA ciphers, set:

UserVars.ESXiVPsAllowedCiphers to “!aNULL:kECDH+AESGCM:ECDH+AESGCM:!RSA+AESGCM:kECDH+AES:ECDH+AES:!RSA+AES”
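
To see what that cipher string actually selects, you can expand it with a local OpenSSL build. This Python sketch uses the standard ssl module and reports each cipher's key exchange. Treat it as an approximation, because your local OpenSSL version will probably differ from the host's, so the exact list will too. Suites such as ECDHE-RSA should remain, since they use RSA only for authentication; ROBOT targets the TLS_RSA suites that use RSA for key exchange (reported as kx-rsa).

```python
import ssl

# Cipher string from the UserVars.ESXiVPsAllowedCiphers setting above.
CIPHERS = "!aNULL:kECDH+AESGCM:ECDH+AESGCM:!RSA+AESGCM:kECDH+AES:ECDH+AES:!RSA+AES"


def selected_ciphers(cipher_string: str) -> list[dict]:
    """Expand an OpenSSL cipher string using the local OpenSSL build."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.set_ciphers(cipher_string)  # unknown aliases are ignored by OpenSSL
    return ctx.get_ciphers()


if __name__ == "__main__":
    for c in selected_ciphers(CIPHERS):
        # 'kea' is the key exchange: kx-ecdhe is fine, kx-rsa would be a TLS_RSA suite.
        print(f"{c['name']:35} {c['protocol']:8} {c.get('kea', '?')}")
```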

As mentioned above, the ESXiVPsAllowedCiphers setting does not work, so instead manually edit /etc/vmware/rhttpproxy/config.xml and add a cipherList entry inside the ssl section under vmacore:

    <config>
      ...
      <vmacore>
        ...
        <ssl>
          ...
          <cipherList>!aNULL:kECDH+AESGCM:ECDH+AESGCM:!RSA+AESGCM:kECDH+AES:ECDH+AES:!RSA+AES</cipherList>
          ...
        </ssl>
        ...
      </vmacore>
      ...
    </config>
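
If you have several hosts to fix, the edit can be scripted. The following is a minimal Python sketch (set_cipher_list is my own hypothetical name, not a VMware tool) that adds or updates the cipherList element under config/vmacore/ssl, creating any missing parents. It assumes you are working on a copy of config.xml taken off the host and needs Python 3.8+ to keep XML comments. ElementTree does not write an XML declaration, so compare the result with the original before copying it back.

```python
import xml.etree.ElementTree as ET

CIPHER_LIST = "!aNULL:kECDH+AESGCM:ECDH+AESGCM:!RSA+AESGCM:kECDH+AES:ECDH+AES:!RSA+AES"


def set_cipher_list(xml_text: str, ciphers: str = CIPHER_LIST) -> str:
    """Add or update config/vmacore/ssl/cipherList and return the new XML text."""
    # Keep comments so the rest of the file survives the round trip.
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(xml_text, parser=parser)
    if root.tag != "config":
        raise ValueError("expected a <config> root element")
    # Walk (or create) vmacore -> ssl.
    parent = root
    for tag in ("vmacore", "ssl"):
        child = parent.find(tag)
        if child is None:
            child = ET.SubElement(parent, tag)
        parent = child
    # Reuse an existing cipherList rather than adding a duplicate.
    cipher_el = parent.find("cipherList")
    if cipher_el is None:
        cipher_el = ET.SubElement(parent, "cipherList")
    cipher_el.text = ciphers
    return ET.tostring(root, encoding="unicode")
```

For example, read the copied file, pass its contents to set_cipher_list() and write the returned string back before restarting rhttpproxy.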

Restart the rhttpproxy service or reboot the host.

TCP/5989 – VMware Small Footprint CIM Broker

Edit /etc/sfcb/sfcb.cfg and add the following lines:

    enableTLSv1: false
    enableTLSv1_1: false
    enableTLSv1_2: true
    sslCipherList: !aNULL:kECDH+AESGCM:ECDH+AESGCM:kECDH+AES:ECDH+AES
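
The same change can be scripted for a copy of sfcb.cfg. This is a hedged Python sketch (update_sfcb_cfg is a hypothetical name) that assumes the file uses the "key: value" lines shown above with "#" for comments. It replaces any existing lines for these keys, drops later duplicates, appends the keys that are missing and leaves everything else untouched.

```python
# Settings from the list above; sslCipherList leaves out the TLS_RSA suites.
SFCB_SETTINGS = {
    "enableTLSv1": "false",
    "enableTLSv1_1": "false",
    "enableTLSv1_2": "true",
    "sslCipherList": "!aNULL:kECDH+AESGCM:ECDH+AESGCM:kECDH+AES:ECDH+AES",
}


def update_sfcb_cfg(text: str, settings: dict = SFCB_SETTINGS) -> str:
    """Set each key in `settings` in sfcb.cfg text, keeping all other lines."""
    out = []
    seen = set()
    for line in text.splitlines():
        stripped = line.strip()
        key = stripped.split(":", 1)[0].strip()
        # Only touch uncommented "key: value" lines for keys we manage.
        if stripped and not stripped.startswith("#") and ":" in stripped and key in settings:
            if key not in seen:
                out.append(f"{key}: {settings[key]}")
                seen.add(key)
            continue  # later duplicates of a managed key are dropped
        out.append(line)
    # Append any managed keys the file did not contain yet.
    out.extend(f"{k}: {v}" for k, v in settings.items() if k not in seen)
    return "\n".join(out) + "\n"
```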

Restart the sfcb (CIM) service or reboot the host.

From what I have seen, sfcb has SSLv3, TLSv1 and TLSv1.1 disabled by default anyway.

TCP/8080 – VMware vSAN VASA Vendor Provider

This should be fixed by the TCP/443 settings.

TCP/8182 – VMware Fault Domain Manager

Set the following Advanced Setting on the *cluster*:

das.config.vmacore.ssl.protocols to “tls1.2”

Then, on each host, run “Reconfigure for vSphere HA”.
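
To confirm the change, along with the 8080 and 9080 ports that should be covered by the TCP/443 settings, a handshake probe pinned to one TLS version at a time shows what each port accepts. This is a Python sketch, not a VMware tool. Because it relies on your local OpenSSL, a "rejected" for TLS 1.0/1.1 could also mean your own client refused to offer that version.

```python
import socket
import ssl


def probe_context(version: ssl.TLSVersion) -> ssl.SSLContext:
    """Client context that offers exactly one TLS version, without certificate checks."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.check_hostname = False  # must be disabled before CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    ctx.minimum_version = version
    ctx.maximum_version = version
    try:
        # Let the local OpenSSL offer legacy ciphers it would otherwise refuse.
        ctx.set_ciphers("DEFAULT:@SECLEVEL=0")
    except ssl.SSLError:
        pass
    return ctx


def accepts(host: str, port: int, version: ssl.TLSVersion, timeout: float = 5.0) -> bool:
    """True if the server completes a handshake using only `version`."""
    ctx = probe_context(version)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                return tls.version() is not None
        except (ssl.SSLError, ConnectionError):
            return False


def scan(host: str, ports=(443, 5989, 8080, 8182, 9080)) -> None:
    """Print which TLS versions each port accepts."""
    versions = (ssl.TLSVersion.TLSv1, ssl.TLSVersion.TLSv1_1, ssl.TLSVersion.TLSv1_2)
    for port in ports:
        for version in versions:
            try:
                result = "accepted" if accepts(host, port, version) else "rejected"
            except OSError as exc:  # closed port, DNS failure, timeout
                result = f"unreachable ({exc.__class__.__name__})"
            print(f"{host}:{port} {version.name}: {result}")
```

For example, scan("esxi01.example.local") with a hypothetical host name; after the HA reconfiguration, port 8182 should only report TLSv1_2 as accepted.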

TCP/9080 – VMware vSphere API for IO Filters

This should be fixed by the TCP/443 settings.